How Will AI Transform Civilization?

Something must be done, and a computer should do it for us. If it’s a simple task, like tracking a database of office supplies, we can write a software program that codes explicit rules telling the computer what to do:

    num_staplers = 7
    num_paperclips = num_staplers + 4
    while num_paperclips > 10:
        num_paperclips += 1

But what if the task is complex, like identifying dog breeds, writing a poem, or winning a game of chess? In these cases, we might not know good rules to code. Instead, we can teach the computer to learn the rules itself. This is the paradigm of machine learning, the bedrock of modern artificial intelligence (AI) systems like ChatGPT, Stable Diffusion, and AlphaGo Zero. In machine learning, an AI learns what to do by training on a dataset of examples. The AI model itself is a giant mathematical function, containing perhaps billions of parameters that must be learned. For this to work, we must provide three things: a training method, a training dataset, and lots of computing power.

There are three basic kinds of training methods in machine learning: supervised learning, unsupervised learning, and reinforcement learning. In supervised learning, we give the AI examples of an input and a label saying the desired output:

Input: [image of a Norfolk Terrier]

Label: “Norfolk Terrier”

Input: [image of a Norwich Terrier]

Label: “Norwich Terrier”

(Image source: ImageNet)

Supervised learning requires a well-defined problem, so that the possible labels can be listed, and known right answers, so that each input can be matched to a label. But what if we want the AI to write poetry – what are the “right answers” now? Instead, we can use unsupervised learning, a broad category of methods that don’t need external labels. One especially powerful unsupervised technique is self-supervised learning (SSL), which is how large language models like ChatGPT are trained. In SSL, labels come from the input data itself. For example, portions of the input might be masked and the AI trained to fill in the blank:

Input: “Rough winds do shake the ____ buds of May”

Label: “darling”
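To make "labels come from the input data itself" concrete, here is a minimal sketch of how masked training pairs could be cut from a single line of text. The helper name `masked_examples` and the `____` mask token are assumptions chosen for illustration; real language models work on tokens rather than whole words, and many predict the next token instead of a masked one.

```python
def masked_examples(text, mask="____"):
    """Turn one sentence into (input, label) pairs by hiding each word in turn."""
    words = text.split()
    pairs = []
    for i, word in enumerate(words):
        # The hidden word becomes the label; no human annotation is needed.
        masked = words[:i] + [mask] + words[i + 1:]
        pairs.append((" ".join(masked), word))
    return pairs


line = "Rough winds do shake the darling buds of May"
pairs = masked_examples(line)
for masked_input, label in pairs:
    print(f"Input: {masked_input!r}  Label: {label!r}")
```

One sentence yields as many training examples as it has words, which is part of why raw text is such an abundant source of training data.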

SSL requires the AI to learn the underlying patterns in the training data, causing it to encode knowledge that can range from spelling and grammar to historical allusions and abstract reasoning. By virtue of its training task, the AI faithfully replicates the structure of the input data. It doesn’t judge like humans do, and will happily generate mediocrity as well as genius. A state-of-the-art strategy to get high-quality output from an SSL-trained AI is peppering its instructions with incantations like “top-rated”, “award-winning”, “hyper-realistic”, and “trending on ArtStation”, hoping it gives you something impressive.

AI trained using SSL predicts what is, with no values or goals to tell it what ought to be. To create AI with goals, we can use reinforcement learning. In reinforcement learning, we provide the AI feedback on its actions instead of specifying labels. I certainly don’t know the best chess moves, and even Magnus Carlsen errs, but giving feedback to a chess-playing AI is easy: win the game!

Reinforcement learning is generally data-hungry and feedback-sparse: an entire chess game boils down to one bit of information. As reward for its difficulty, it can create AI systems that exceed the bounds of human guidance and achievement. Reinforcement learning can also be used to train AI to emulate human values. ChatGPT uses a method called Reinforcement Learning from Human Feedback (RLHF), learning from human ratings of its responses to provide answers that appear more helpful, harmless, and honest.
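As a rough illustration of learning from sparse feedback, the sketch below trains an agent by self-play on a toy game far simpler than chess: players alternate taking 1 to 3 stones from a pile, and whoever takes the last stone wins. The game, the function names, and the settings (20,000 episodes, 20% random exploration) are all assumptions chosen for brevity, and the simple averaging of outcomes used here is only one of many reinforcement learning methods. The only feedback is who won at the end.

```python
import random


def train_nim(episodes=20000, start=10, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {}       # (stones, move) -> average final reward seen after that move
    counts = {}  # (stones, move) -> number of times the move was tried

    def legal(stones):
        return [m for m in (1, 2, 3) if m <= stones]

    def choose(stones):
        # Explore at random some of the time, otherwise pick the best-looking move.
        if rng.random() < epsilon:
            return rng.choice(legal(stones))
        return max(legal(stones), key=lambda m: q.get((stones, m), 0.0))

    for _ in range(episodes):
        stones, player = start, 0
        history = {0: [], 1: []}
        while stones > 0:
            move = choose(stones)
            history[player].append((stones, move))
            stones -= move
            winner = player  # whoever moved last took the final stone
            player = 1 - player
        # The single win/lose signal is credited to every move made in the game.
        for p in (0, 1):
            reward = 1.0 if p == winner else -1.0
            for key in history[p]:
                counts[key] = counts.get(key, 0) + 1
                old = q.get(key, 0.0)
                q[key] = old + (reward - old) / counts[key]
    return q


def best_move(q, stones):
    moves = [m for m in (1, 2, 3) if m <= stones]
    return max(moves, key=lambda m: q.get((stones, m), 0.0))


q = train_nim()
print({stones: best_move(q, stones) for stones in range(1, 8)})
```

Nobody tells the agent the known winning strategy for this game (leave the opponent a multiple of four stones). Any strategy it arrives at has to be inferred from wins and losses alone.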

Whether with supervised, unsupervised, or reinforcement learning, the art of machine learning is to create a system that generalizes the training data. It shouldn’t perfectly memorize what’s been seen, but instead infer simple underlying patterns that predict the never-before-seen. To do this, of course, there really must exist some simple underlying patterns. An ultimate AI given unlimited data and processor power still couldn’t predict random numbers better than chance.
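A toy sketch can illustrate both halves of this point. The pattern y = 2x + 1, the names `memorizer` and `line_model`, and the coin-guessing strategy are all assumptions made up for the example. A lookup table recalls its training data perfectly but has nothing to say about new inputs, a two-parameter line extends to them, and no strategy does much better than chance on fair coin flips.

```python
import random

# Seen and unseen examples of a hypothetical pattern, y = 2x + 1.
train = {x: 2 * x + 1 for x in range(5)}
unseen = {x: 2 * x + 1 for x in range(5, 10)}


def memorizer(x):
    """Perfect recall of the training set, nothing to say beyond it."""
    return train.get(x)


# A two-parameter model: slope and intercept estimated from two training points.
slope = (train[1] - train[0]) / (1 - 0)
intercept = train[0]


def line_model(x):
    return slope * x + intercept


memorizer_hits = sum(memorizer(x) == y for x, y in unseen.items())
line_hits = sum(line_model(x) == y for x, y in unseen.items())
print("correct on unseen inputs:", memorizer_hits, "vs", line_hits)

# With no pattern to find, even a sensible strategy stays near 50%.
rng = random.Random(0)
flips = [rng.randint(0, 1) for _ in range(10_000)]
correct = 0
ones = 0
for i, flip in enumerate(flips):
    guess = 1 if ones * 2 > i else 0  # guess the majority outcome so far
    correct += guess == flip
    ones += flip
coin_accuracy = correct / len(flips)
print("accuracy on coin flips:", coin_accuracy)
```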

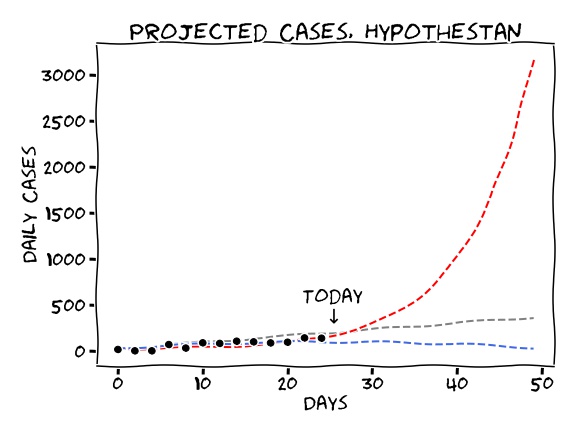

Multiple patterns might explain the same data. Consider the simplest situation in machine learning, the C. Elegans of AI: fitting a line to some data points. Suppose that a completely hypothetical novel disease has struck Hypothestan, and the number of cases is rising:

Will case numbers continue to climb at the same rate? Explode exponentially? Level off and start to decrease? Multiple predictions could be fully compatible with the data seen so far. Past experience, parsimony, and expert knowledge might give guidance, but in the end the best model is the one that best predicts the unseen data – what happens next. Nature is the final test.
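The sketch below makes the ambiguity concrete with invented numbers. The case counts are assumptions for illustration, not data from any real outbreak, and the two candidate models (constant daily increase versus constant daily growth factor) are just two of the many one could try. A model that levels off is omitted for brevity. Both candidates match the five observed days to within about one case, yet their forecasts for day 20 differ by more than a factor of ten.

```python
import math

# Hypothetical case counts for Hypothestan, invented for illustration.
days = [0, 1, 2, 3, 4]
cases = [10, 13, 17, 22, 28]


def fit_line(xs, ys):
    """Ordinary least-squares fit of ys = intercept + slope * xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Model 1: cases grow by a constant amount per day.
lin_b, lin_m = fit_line(days, cases)


def linear(day):
    return lin_b + lin_m * day


# Model 2: cases grow by a constant factor per day (a line fit to log(cases)).
log_b, log_m = fit_line(days, [math.log(c) for c in cases])


def exponential(day):
    return math.exp(log_b + log_m * day)


def worst_miss(model):
    """Largest absolute error of a model on the days already observed."""
    return max(abs(model(d) - c) for d, c in zip(days, cases))


print("worst miss on seen data:", worst_miss(linear), worst_miss(exponential))
print("day 20 forecast:", linear(20), exponential(20))
```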

Increasingly powerful AI systems trained using machine learning will transform civilization, but the kinds of transformations they will bring about aren’t all the same. Civilization provides us benefits: technology and medicine; art and philosophy; natural resources; scientific, economic, social, and political systems. AI can level up an existing benefit, doing what’s already done cheaper, faster, fairer, better: this is a sustaining innovation for civilization. Or AI can create an entirely new benefit, something new under the sun: this is a disruptive innovation for civilization. What occurs depends on the training data. Human-generated training data lead to sustaining civilizational innovations, while data sourced from the external world enable disruptive ones. Sustaining civilizational innovations will upend economies; disruptive civilizational innovations will reshape the world.

Human civilization provides many benefits, but those benefits aren’t evenly distributed. What I call a sustaining innovation for civilization (to adapt Clayton Christensen’s terms) improves the world in essence by making available to everyone what the elite already have, and enhancing the lives of the elite at most a little.

Imagine yourself scratching out a precarious sustenance as a medieval peasant farmer circa 1500. One day, a genie appears and magically transforms you into a landed noble. Hundreds of serfs now grow your crops, while dozens of servants prepare your food, weave your clothes, heat your home. You can live your life free from survival’s pressing needs.

Suppose the genie now turns you into a modern-day middle-class American. In terms of daily necessities, you can get everything you had before and more from the local mall and grocery store. But now the nearest city is a half hour’s drive, not a day’s journey; another continent is a day’s flight, not six months’ sail. You can freely criticize both king and pope; learn about genes, governments, and galaxies; survive smallpox; enjoy coffee, chocolate, french fries, and sushi. You have access to books, ballots, and birth control.

The average American’s daily energy consumption is roughly equivalent to the work done by 250 or so servants and laborers, powered primarily by fossil fuels and renewable electricity rather than by human drudgery. Sustaining civilizational innovations eliminated the peasant’s toil; disruptive civilizational innovations delivered everything else.

AI systems trained on human-generated datasets enable sustaining civilizational innovations. Whether the training method is supervised learning using human-determined labels, unsupervised learning on human creations like writing and art, or reinforcement learning on human judgments, scaling up an AI model via clever architectures and massive server farms will allow it to replicate and generalize human behavior ever more faithfully. A system given only human input lives inside a human reference frame.

Could such an AI ever jump out of the system and transcend its training? Many models can explain the same data, and it’s certainly possible for an AI to detect patterns different from what humans assume it would. It can lay bare humanity’s embarrassments, like a language model trained on tweets that spews cringeworthy hot takes. At worst, an AI could develop dangerously misaligned behaviors. For example, tuning an AI with RLHF to make it tell the “truth” might just teach it to flatter us, since who else is to judge? Or a model might seem to seriously miss the point, like an AI diagnostician of heart disease that ignores the patient’s electrocardiogram but cares a lot about the hospital’s address. At best, these divergences from expectations could spur our creativity, helping us think differently: why might two neighborhoods have disparate heart disease rates? These situations don’t present significant leaps over human capabilities, but instead reflect the gap between what humans believe of ourselves and what we truly are. Crucially, to see this gap we must invoke the real world’s objective truth, unfiltered to the extent possible by human subjectivity.

Suppose an AI trained on human-generated input stumbled upon an internal representation of the world more powerful than the flawed human supervision it was given – not merely different, but in some way more complete, self-consistent, or true. For example, a dog, a chicken, a crow, and a fish are obviously all animals. That’s basic biology. But a peasant prioritizing survival over taxonomy might think in totally different categories – after all, a person can eat a fish but not a crow! Only a fool would group fish and crows together. Let’s pretend this peasant trains a creature-classifying AI to see the world his way. Now suppose that in the course of its training the AI hits upon the modern scientific concept of “animal”, which we can assume for argument’s sake really is the more elegant or broadly useful theory. What will happen as the AI is trained?

If two theories make the same predictions, the simpler theory is better. Let’s say the scientific concept of an animal is simpler, and the AI uses it. However, since the peasant’s preferences dictate the “right” answers on the training data, the AI has to tack on a special exception every time there’s a discrepancy between the scientific and the folk concept. Eventually, all the exceptions will so lard up the scientific theory’s original elegance that the AI will be forced to discard it. Alternatively, the scientific theory might be better because it gives more accurate predictions than the folk theory. But again, the peasant is always “right”, so this can never be the case for data seen in training. Since cognitive resources are finite, training will optimize away a latent capability that has always been useless. Either way, an AI trained using human-generated datasets will tend to replicate human concepts and capabilities.

Generative AI models like ChatGPT, DALL-E, and Stable Diffusion are largely sustaining for civilization. While the specific text and images they generate are new to humanity, the ability to generate them is not. Human authors and artists create comparable content. These models are civilizationally sustaining because their training data are human-made. It’s true their inputs may refer to things in the world: text mentions facts like the quadratic formula and George Washington’s birthday, and images include photos of garter snakes and mixing bowls. But these references are mediated entirely through human representations, unrooted in objective experience. The concepts aren’t connected to a predictive model of the external world. This could change in the future if language or image models are embedded within larger AI systems that do interact directly with the real world. For now, however, even purely sustaining AI systems can have massive economic and societal impact.

First, AI is software. The upfront cost to develop and train an AI model may be high, but the marginal cost to run it is very low – perhaps not zero, but close enough compared to the cost of human labor so as to represent a qualitative shift enabling new business models and institutions. Automation can make processes faster, highly scalable, and less prone to mistakes, biases, and arbitrary delays.

AI harnesses collective wisdom. In many cases, an AI model trained on a massive, diverse dataset can exceed the performance of any individual human. If your doctor diagnoses you with cancer, you might seek a second opinion; the advice of a panel of expert radiologists would be better yet; a hypothetical AI trained with knowledge of every cancer screening ever done might be best of all. AI’s improvement here comes from canceling out the idiosyncratic biases and gaps in experience we all have as individuals, equaling what humanity could accomplish if we could put our heads together.
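The statistical idea behind this claim can be sketched in a few lines. The simulation below assumes each expert’s estimate is the true value plus independent random error, which is an idealization: biases shared by all experts, or baked into the training data, do not cancel this way. The names `individual_estimate` and `panel_estimate`, the panel size of 25, and the error scale are hypothetical choices.

```python
import random
import statistics

rng = random.Random(0)
TRUE_VALUE = 0.0  # hypothetical ground truth the experts are trying to estimate


def individual_estimate():
    """One expert: the truth plus that expert's own idiosyncratic error."""
    return TRUE_VALUE + rng.gauss(0.0, 1.0)


def panel_estimate(size):
    """A panel: the average of several independent experts."""
    return statistics.fmean(individual_estimate() for _ in range(size))


trials = 2000
individual_error = statistics.fmean(
    abs(individual_estimate() - TRUE_VALUE) for _ in range(trials)
)
panel_error = statistics.fmean(
    abs(panel_estimate(25) - TRUE_VALUE) for _ in range(trials)
)
print("typical error of one expert:", individual_error)
print("typical error of a panel of 25:", panel_error)
```

Under the independence assumption, the panel’s typical error shrinks roughly with the square root of its size, so a panel of 25 is off by about a fifth as much as a lone expert.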

AI replaces drudgery. AI will automate boring, non-creative mental work just as machines have already automated routine physical work. Repetitive tasks like searching for information, filling out forms, and writing reports could disappear. While some jobs and chores may go away, I believe humanity has unlimited ingenuity and will always create new pointless busywork.

Finally, AI democratizes expertise. Everyone will have easy access to custom-made art and essays written on demand. AI will make specialized knowledge from law, medicine, engineering, and science widely available, enhancing productivity and enriching our intellectual lives.

A disruptive civilizational innovation, on the other hand, adds something entirely new to civilization. It provides what would be for everyone alive an unworkable technology, virgin resource, intractable algorithm, or unthinkable idea. These innovations have historically come from rediscovered ancient knowledge, trade with foreign civilizations, exploration and accident, and systematic scientific and intellectual progress. In today’s global world, only the last matters much, and that’s where AI matters too. Disruptive innovations for civilization affect everyone, rich or poor. Their long-term effects are difficult to predict.

To create a disruptive civilizational innovation, an AI must be trained on data deriving directly from nature or generated by its own self-guided exploration, with minimal human processing. Whether using supervised, unsupervised, or reinforcement learning, human input cannot be the key ingredient, though it may still play important roles such as jump-starting training or teaching the AI human preferences and values. The kinds of AI systems that enable disruptive innovations for civilization include game-playing AIs trained using reinforcement learning like AlphaGo Zero, and AI systems for scientific and mathematical discovery like AlphaFold and AlphaTensor. These models have made novel discoveries and achieved superhuman performance in their respective domains. Going forward, self-supervised learning may be a promising paradigm for building AI models with rich knowledge of particular domains and the world as a whole.

How might AI’s disruptive civilizational impacts come about? As a starting point, all of the advantages of sustaining innovations for civilization that we’ve already discussed still hold. The potential impact has a high floor.

Importantly, AI that doesn’t rely on human input for training can achieve qualitatively superhuman performance, accomplishing intellectual feats that exceed humankind’s collective wisdom. Even among humans, an extraordinary individual can sometimes outwit the crowd. In 1999, reigning world chess champion Garry Kasparov played a team of 50,000 people coordinating over the internet in a game known as Kasparov versus the World. Kasparov won. Some domains, like chess, literature, and mathematics, are genius-limited.

We could simply treat a superhuman AI’s output as an accomplished feat that lays a foundation on which further research, development, and entrepreneurship can build. Einstein may have discovered relativity, but lesser lights than Einstein can still make use of his results. I can’t build a laptop from scratch, but I used one to write this essay. A protein structure predicted by AlphaFold can guide a biologist’s research just as would a published X-ray crystallography experiment.

Alternatively, we could seek to learn from what a superhuman AI does, both to guide further exploration and research and to increase our understanding of the world for its own sake. This can first of all be done by examining the AI’s output, learning from the master’s example. For example, professional Go players have developed new strategies by studying games played by AlphaGo Zero. Furthermore, an AI is a giant mathematical function, and we can read out the calculations that form its thought processes. By developing methods for mechanistic interpretability that let us reverse engineer these calculations into human-understandable algorithms, we could soon study not only what the AI does, but why it does it. An AI model, if it’s any good, encodes latent patterns in its training data that form the basis of its predictions. A method to interpret how an AI trained on scientific observations understands its training data could surface new principles of nature and expand the possibilities of human thought.

Sustaining and disruptive civilizational innovations are distinguished in kind, not necessarily in absolute importance. While I believe that the disruptive innovations on the whole will have by far the greater long-term impact on humanity, this need not be true of any one given technology. Game-playing is a proof of concept of superhuman AI, not something of economic importance in itself. In the long term, sustaining civilizational innovations might have disruptive second-order effects if they free more people to pursue art, invention, and science.

A disruptive civilizational innovation may have limited impact if it pertains to a domain where humans are already close to the upper bound on performance. There’s not a big real-world difference between the theoretically optimal self-driving car that can drive at maximum speed without ever having an accident, and a human professional driver who can merely drive extremely fast with extremely few accidents. This technology’s main benefit would come from its sustaining aspect – no need to pay a driver.

A sustaining civilizational innovation can lead to a disruptive innovation in a particular business or market in the standard Christensen sense, and vice versa. A robot consultant providing passable business advice for cents on McKinsey’s dollar is a disruptive innovation for a company but a sustaining one for civilization as a whole. Conversely, technologies based on new scientific discoveries can enrich existing megacorps just as readily as they can seed startups.

AI’s apotheosis is artificial general intelligence (AGI). Every thinker defines AGI differently, but loosely, it would be an AI that does everything that an intelligent being can. A narrow AI system like those that exist today might summarize legal briefs or it might flip pancakes, but an AGI could do it all. With the concepts of sustaining and disruptive civilizational innovations we’ve developed in this essay, we can categorize AGI created using machine learning into two types. If an AGI were trained only on human-generated data, it would produce sustaining civilizational innovations. The most basic such AGI would be human-level: an AI that can do everything a normal human can do. It would pass the Turing Test. The ultimate such AGI would be humanity-level: an AI that can do everything any existing human or team of humans can do. Recent experience with large language models suggests that as models get bigger, performance improves and new capabilities emerge at a predictable rate. The scaling hypothesis holds that this trend will continue indefinitely. If true, the only ingredient needed to turn a human-level AGI humanity-level is scale. On the other hand, an AGI trained on self-generated or natural data could produce disruptive civilizational innovations. Such an AGI would be superhuman, capable of doing things no human can do. Holden Karnofsky’s concept of a Process for Automating Scientific and Technological Advancement or PASTA would be a superhuman AGI in this sense.

Rather than considering an AI’s capabilities, we can discuss its impact. Open Philanthropy defines Transformative AI informally as “software that has at least as profound an impact on the world’s trajectory as the Industrial Revolution did”, in essence, AI that transforms civilization. Whether narrow or general, AI that transforms civilization will do so by generating either sustaining or disruptive civilizational innovations, or both. To predict what an AI might do, look to its training data.

How will AI transform civilization? It’s possible science is essentially solved, and researchers just need to finalize a few details. If so, sustaining civilizational innovations from AI are most important. But if not – if today’s scientific knowledge is like a flickering candle next to nature’s shining sun – then disruptive civilizational innovations matter most.
